http://www.marinelink.com/news/cybersecurity-maritime416578

"Cybersecurity incursions and threats to the Marine Transportation System (MTS) and port facilities throughout the country are increasing," said Dr. Hady Salloum, Director of MSC. "This research project will support the missions of the DHS Center of Excellence and the U.S. Coast Guard to address these concerns and vulnerabilities and will identify policies and risk management strategies to bolster the cybersecurity posture of the MTS enterprise."

I don't think there is anything unique about the maritime environment when it comes to computer security. It's just like other industrial control situations with the twist that you have people living at the "site." That isn't really even a twist as there are lots of jobs where people live at the site. The main problems I have seen or heard of on ships are no different than just basic computer security issues.

I take this a bit personally because of an incident back in 2002. I was on the RVIB Palmer (NSF's ice breaker) as a scientist. The head of shipboard computing pulled me aside one morning with the statement, "If you tell me what you did, I won't press charges." Needless to say, I had no idea what he was referring to. After quite the standoff, he finally revealed that most of the ship's network had gone down. He was accusing me of hacking into the ship's systems and trashing things. After even more of a standoff, he said the logs showed me breaking in. I tried to explain everything I had done on the ship: with permission from the other tech first, I had logged into a machine with the navigation logs on it and written a shared memory reader program to write out a CSV file of the ship's track. He didn't believe me. He said I was the only person on the ship who knew how to do this kind of malicious thing. I finally turned my back on him and walked off. Many hours later, he found me to apologize. He said a Windows virus had gotten in through someone's email and had run rampant on the system. At the time, I only had a Linux laptop and was using the Solaris workstations on the ship... he said he now understood that it wasn't me. I very much appreciated the apology with the accompanying explanation, but not his initial reaction.

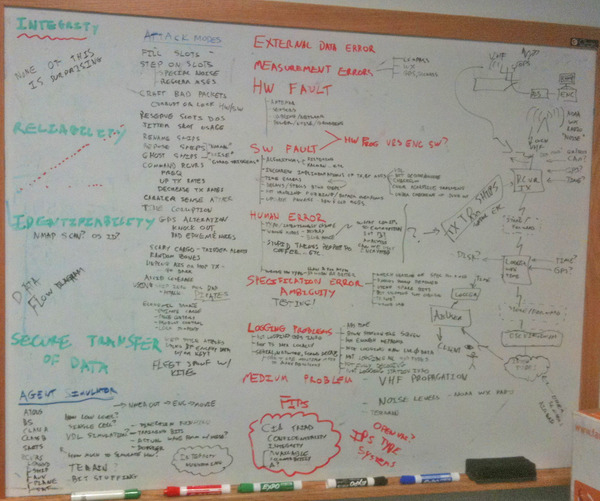

So when it comes to computer security on ships, the things I see are:

- Get the software vendors to provide systems that are better tested and audited. Which ECDIS has user visible unittests and integration tests? Which use all the available static analyzers like Coverity and Clang Static Analyzer?

- Force all the specifications for software and hardware to be public without paywalls

- Consider using open source code where anyone can audit the system

- Switch away from Windows to stripped down OSes with the components needed for the task

- Teach your users about safely using computers

- Run system and network scanners

- Ensure that updates are done through proper techniques with signed data and apps

- Hardware watchdogs on machines

- Too many alarms on the bridge for irrelevant stuff

- Stupid expensive connectors and custom protocols blocking using industry standard tools
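
To make the "signed data and apps" item concrete, here is a minimal sketch of checking an update file before installing it. It uses HMAC-SHA256 from the Python standard library purely as a stand-in: an HMAC is a shared-secret tag, not a public-key signature, and a real update channel would more likely use asymmetric signatures so the ship never holds the signing secret. The function names `sign_update` and `verify_update` are made up for this illustration.

```python
import hashlib
import hmac


def sign_update(key: bytes, update: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the update bytes (vendor side)."""
    return hmac.new(key, update, hashlib.sha256).hexdigest()


def verify_update(key: bytes, update: bytes, tag: str) -> bool:
    """Recompute the tag on the ship and compare it in constant time."""
    expected = sign_update(key, update)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"hypothetical-shared-secret"  # assumption: provisioned out of band
    update = b"pretend this is a chart update"
    tag = sign_update(key, update)

    print(verify_update(key, update, tag))         # untouched update
    print(verify_update(key, update + b"x", tag))  # tampered update
```

The point is only that the machine refuses anything whose tag does not match, no matter how the file arrived (USB stick, satellite link, email attachment).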

Common issues I've heard of:

- Crew browsing the internet and watching videos on critical computers, which then get viruses

- Updates of navigation data opening machines up for being owned

- Corrupt data going unchecked through the network (e.g. NMEA has terrible integrity checking)

- Windows machines being flaky and operations guides saying to continuously check that the clock changes to make sure the machine has not crashed

- Single points of failure by banning things like hand held GPSes or mobile devices

- Devices not functioning, that are impossible to diagnose / fix except by the manufacturer

- Updates taking all the ship's bandwidth because every computer wants to talk to home

- Nobody on the ship knowing how systems work and the installer has left the company

- TWIC cards... spending billions for no additional security

- No easy two factor authentication for the ship's systems

- Being unable to connect data from system A to system B because of one or more reasons

- No way to run cables without massive cost

- Incompatible connectors

- Incompatible data protocols

- Proprietary data protocols (e.g. NMEA paywalled BS)

- Inability to do the correct thing because a standard says you can't. e.g. IMO, IALA, ECDIS, NMEA, USCG, etc. are full of standards designed by people who did not know what they were doing w.r.t. engineering. Just because you are an experienced mariner doesn't mean you know anything about designing hardware or software.

- Interference between devices with no way for the crew to test

- Systems being locked down in the incorrect configuration. e.g. USCG's no-change rule for Class-B AIS

- No consequences for incorrect systems and no positive feedback systems for fixing them. e.g. why can't it be a part of the standard watch guides to check your own AIS with another ship or the port? The yearly check of AIS settings guideline is dumb.

- People hooking the wrong kind of electrical system to the ship

- No plans to the ship, so people make assumptions about what is where and how it should work. As-builts don't have xray vision. Open ship designs would be a huge win

- Ability of nearby devices to access the ship's systems

- And so on....
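
On the NMEA integrity point in the list above: the NMEA 0183 sentence checksum is just an XOR of the characters between the leading `$` (or `!`) and the `*`, written as two hex digits. Here is a rough sketch of that check, with function names of my own choosing. Because XOR does not care about order, two transposed digits in a position produce exactly the same checksum, which the last lines demonstrate with a made-up payload.

```python
def nmea_checksum(payload: str) -> str:
    """XOR every byte of the payload and return two uppercase hex digits."""
    value = 0
    for byte in payload.encode("ascii"):
        value ^= byte
    return f"{value:02X}"


def is_valid_sentence(sentence: str) -> bool:
    """Check the trailing *HH checksum of a '$...' or '!...' sentence."""
    sentence = sentence.strip()
    if not sentence or sentence[0] not in "$!" or "*" not in sentence:
        return False
    payload, _, received = sentence[1:].rpartition("*")
    try:
        return nmea_checksum(payload) == received[:2].upper()
    except UnicodeEncodeError:
        # Non-ASCII bytes can only come from corruption.
        return False


if __name__ == "__main__":
    # Hypothetical payload, not taken from a real receiver log.
    good = "GPGLL,4916.45,N,12311.12,W,225444,A"
    swapped = "GPGLL,4961.45,N,12311.12,W,225444,A"  # two digits transposed

    sentence = f"${swapped}*{nmea_checksum(good)}"
    # The position is wrong, yet the checksum computed for the
    # original payload still validates the altered sentence.
    print(is_valid_sentence(sentence))
```

A single flipped character is caught, but a transposition or any pair of errors that cancel under XOR is not, and nothing in the checksum says who sent the sentence.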

Sounds just like cars, IoT devices, home automation, etc.