but have not heard from any others.

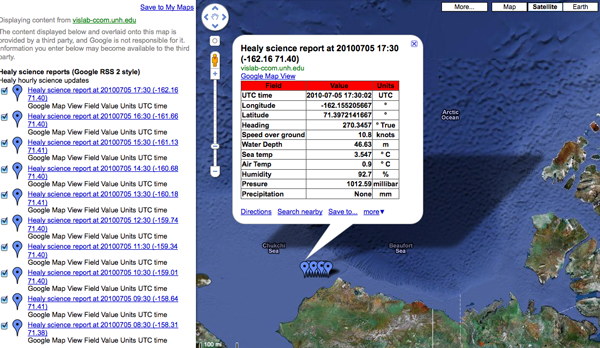

Here is an example from my USCGC Healy feed, where the science data appears only as an HTML table within the description:

Healy Science feed in Google Maps

It's got some really nice features that include:

- Being pretty simple (when compared to SensorML and SOS)

- You can just throw a URL in Google Maps and see the data

- It works inside normal RSS readers (e.g. my USCGC Healy Feeds)

- It's a simple file that you can copy from machine to machine or email.

- It should be easy to parse

- Points and lines work great

The trouble comes with:

- Validation is a pain, but appears to be getting better

- What should you put in the title and description?

- It seems you have to create separate item tags for points and lines for things like ships

- There is no standard for machine readable content other than the location

Here are my thoughts on the last point. First a typical entry that has info in the title and description:

<item>

<title>HOS IRON HORSE - 235072115: 0KTS @ 17 Jun 2010 16:07:10 GMT</title>

<description>Time: 17 Jun 2010 16:07:10 GMT Speed: 0</description>

<pubDate>17 Jun 2010 16:07:10 GMT</pubDate>

<georss:where>

<gml:Point>

<gml:pos>28.0287 -89.1005</gml:pos>

</gml:Point>

</georss:where>

</item>

This makes the data easy for humans to read and works with Google Maps and OpenLayers.

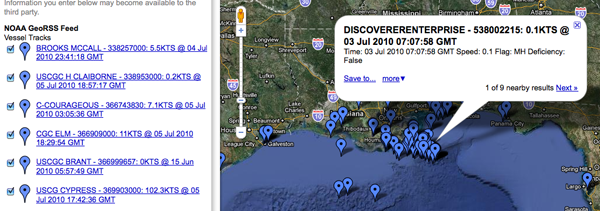

This is how the AIS feed looks in Google Maps... mostly enough to see what is going on. If you know the name of a vessel, you can find it on the left side list of ships. That's a great start, but it is hard to take that info into a database. If the fields change at all in the title or description, all parsers that use that feed MUST be rewritten.
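
To make that fragility concrete, here is a minimal Python sketch. It assumes the title always follows the `NAME - MMSI: SPEEDKTS @ TIMESTAMP` layout of the item above; the regular expression and the `parse_title` helper are hypothetical names for illustration, not part of any feed specification.

```python
import re

# Hypothetical pattern for titles such as
# "HOS IRON HORSE - 235072115: 0KTS @ 17 Jun 2010 16:07:10 GMT"
TITLE_RE = re.compile(
    r"^(?P<name>.+) - (?P<mmsi>\d{9}): (?P<sog>\d+(?:\.\d+)?)KTS @ (?P<time>.+)$"
)


def parse_title(title):
    """Return a dict of fields, or None if the layout is not recognized."""
    match = TITLE_RE.match(title)
    if match is None:
        return None
    fields = match.groupdict()
    fields["sog"] = float(fields["sog"])
    return fields


good = parse_title("HOS IRON HORSE - 235072115: 0KTS @ 17 Jun 2010 16:07:10 GMT")
# A small, reasonable change to the title layout is enough to break the parser.
changed = parse_title("HOS IRON HORSE (235072115) 0 knots @ 17 Jun 2010 16:07:10 GMT")
print(good)
print(changed)
```

The second call returns `None`: nothing in the feed tells the parser that the layout changed, so every consumer has to notice and update its own pattern.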

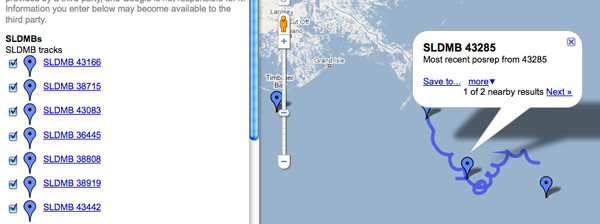

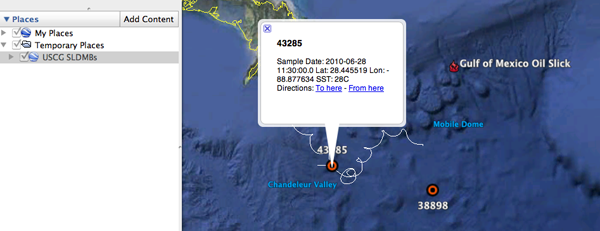

This buoy field shows the opposite idea: putting all the information into fields. It's also missing pubDate and such, but we should focus on the SeaSurfaceTemp and SampleDate. These are more machine readable. The XML and an image:

<item>

<title>SLDMB 43166</title>

<description>Most recent posrep from 43166</description>

<SeaSurfaceTemp>30C</SeaSurfaceTemp>

<SampleDate>2010-06-25 11:00:00.0Z</SampleDate>

<georss:where>

<gml:Point>

<gml:pos>27.241828, -84.663689</gml:pos>

</gml:Point>

</georss:where>

</item>

There are some problems in the sample above. First, the description doesn't contain a human readable version. This causes the Google Maps display to give us nothing more than the current position of this "buoy" (and a recent track history that comes as a separate entry). Ouch. That's hard to preview.

Second, the machine readable portion is fine, but I can't write anything that can discover additional data fields if they are added. If someone adds <foo>1234</foo>, is that field a part of something else or is it more sensor data that I should be tracking? A namespace for sensor data would help. Then I could pick off all of the fields that are in the "SimpleSensorData" namespace. That said, namespaces are good, but a pain. I would prefer a data block, where everything in there is a data field. It would also be good to separate units from the values. Here is how it might look:

<rss xmlns:georss="http://www.georss.org/georss" xmlns:gml="http://www.opengis.net/gml" version="2.0">

<channel>

<title>NOAA GeoRSS Feed</title>

<description>Vessel Tracks</description>

<item>

<title>HOS IRON HORSE - 235072115: 0KTS @ 17 Jun 2010 16:07:10 GMT</title>

<description>Time: 17 Jun 2010 16:07:10 GMT Speed: 0KTS

<a href="http://photos.marinetraffic.com/ais/shipdetails.aspx?MMSI=235072115">MarineTraffic entry for 235072115</a> <!-- or some other site to show vessel details -->

</description>

<updated>2010-06-17T16:07:10Z</updated>

<link href="http://gomex.erma.noaa.gov/erma.html#x=-89.1005&y=28.00287&z=11&layers=3930+497+3392"/>

<!-- Proposed new section -->

<data>

<mmsi>235072115</mmsi>

<name>HOS IRON HORSE</name>

<!-- Enumerated lookup data types -->

<type_and_cargo value="244">INVALID</type_and_cargo>

<nav_status value="3">Restricted Maneuverability</nav_status>

<!-- Values with units -->

<cog units="deg true">287</cog>

<sog units="knots">0</sog>

<!-- Add more data fields here -->

</data>

<!-- The meat of GeoRSS -->

<georss:where>

<gml:Point>

<gml:pos>28.0287 -89.1005</gml:pos>

</gml:Point>

</georss:where>

</item>

<!-- more items -->

</channel>

</rss>
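
A rough sketch of why the data block helps, using Python's standard `xml.etree.ElementTree`. The item below is a trimmed copy of the proposal (I dropped the link, whose URL would need its `&` characters escaped as `&amp;` to be well-formed XML), and `read_item` is a hypothetical helper. The point is that the reader never names a field in advance: whatever shows up inside `<data>` is sensor data.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the proposed item; only the <data> block and position are kept.
ITEM_XML = """
<item xmlns:georss="http://www.georss.org/georss"
      xmlns:gml="http://www.opengis.net/gml">
  <title>HOS IRON HORSE - 235072115</title>
  <data>
    <mmsi>235072115</mmsi>
    <name>HOS IRON HORSE</name>
    <nav_status value="3">Restricted Maneuverability</nav_status>
    <cog units="deg true">287</cog>
    <sog units="knots">0</sog>
  </data>
  <georss:where>
    <gml:Point><gml:pos>28.0287 -89.1005</gml:pos></gml:Point>
  </georss:where>
</item>
"""

NS = {
    "georss": "http://www.georss.org/georss",
    "gml": "http://www.opengis.net/gml",
}


def read_item(item):
    """Collect every child of <data> without knowing the field names in advance."""
    fields = {}
    for child in item.find("data"):
        # Keep the element text plus any attributes (units, value, ...).
        fields[child.tag] = {"text": child.text, **child.attrib}
    pos = item.find("georss:where/gml:Point/gml:pos", NS).text
    lat, lon = map(float, pos.split())
    return fields, (lat, lon)


fields, position = read_item(ET.fromstring(ITEM_XML))
for tag, info in fields.items():
    print(tag, info)
print(position)
```

If someone later adds a new element inside `<data>`, this loop picks it up with no code change, which is exactly what the title and description approach cannot do.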

Perhaps it would be better for each data item inside of data to use one generic tag, such as <data_value>, with a name attribute:

<data_value name="sog" long_name="Speed Over Ground" units="knots">12.1</data_value>
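
Reading that variant is just as simple. This is only a sketch: the `cog` entry is one I added for illustration, and `read_data_values` is a hypothetical helper that assumes every value is numeric.

```python
import xml.etree.ElementTree as ET

# The sog line is from the text above; the cog line is added for illustration.
DATA_XML = """
<data>
  <data_value name="sog" long_name="Speed Over Ground" units="knots">12.1</data_value>
  <data_value name="cog" long_name="Course Over Ground" units="deg true">287</data_value>
</data>
"""


def read_data_values(data):
    """Map each name attribute to a (value, units) pair."""
    return {
        dv.get("name"): (float(dv.text), dv.get("units"))
        for dv in data.findall("data_value")
    }


values = read_data_values(ET.fromstring(DATA_XML))
print(values)
```

The trade-off is that the tag name no longer says anything, so a consumer has to look at the attributes, but the set of tags it needs to understand never grows.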

Or we could just embed json within a data tag... but that would be mixing apples and oranges. If we start doing json, the entire response should be GeoJSON.
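
For comparison, here is roughly what the same vessel report could look like as GeoJSON, built in Python. The property names (`sog_knots`, `cog_deg_true`) and the `to_geojson_feature` helper are my own assumptions; GeoJSON itself only fixes the `type`, `geometry`, and `properties` members. Note that GeoJSON positions are longitude first, the reverse of the `gml:pos` order used above.

```python
import json


def to_geojson_feature(lat, lon, properties):
    """Build a GeoJSON Feature; GeoJSON positions are [longitude, latitude]."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


feature = to_geojson_feature(
    28.0287,
    -89.1005,
    # Hypothetical property names carrying the same values as the <data> block.
    {"mmsi": 235072115, "name": "HOS IRON HORSE", "sog_knots": 0, "cog_deg_true": 287},
)
collection = {"type": "FeatureCollection", "features": [feature]}
print(json.dumps(collection, indent=2))
```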

For reference, here is what one of those blue tails looks like in the GeoRSS:

<item>

<title>SLDMB 43166 Track</title>

<georss:where>

<gml:LineString>

<gml:posList>

27.241828 -84.663689

27.243782 -84.664666

27.245442 -84.66574

27.246978 -84.666779

27.248248 -84.668049

27.250104 -84.669318

27.251699 -84.670985

27.253158 -84.673045

27.254232 -84.6749

27.255209 -84.676561

27.256076 -84.678023

</gml:posList>

</gml:LineString>

</georss:where>

</item>
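
The location really is the easy part. A minimal sketch of turning a `gml:posList` string into coordinate pairs, assuming whitespace-separated latitude and longitude values as in the track above (`parse_pos_list` is a hypothetical helper, and only the first three points are shown):

```python
def parse_pos_list(text):
    """Split a gml:posList string into (lat, lon) tuples."""
    numbers = [float(token) for token in text.split()]
    if len(numbers) % 2 != 0:
        raise ValueError("posList must contain an even number of values")
    # Pair up alternating values: even indexes are lat, odd indexes are lon.
    return list(zip(numbers[0::2], numbers[1::2]))


track = parse_pos_list("""
    27.241828 -84.663689
    27.243782 -84.664666
    27.245442 -84.66574
""")
print(track)
```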

There was also an assertion that KML would be better. However, I would argue against KML as a primary data transport mechanism. KML is a presentation description, not a data encoding format. It's much like when I ask someone for an image and they take a perfectly good JPG, TIF, or PNG and put it in a PowerPoint... sending me only a PowerPoint. That can be useful, especially if there are annotations they want on a final, but I must have the original image or a lot is lost.

Looking at the KML, you can see that it has all the same problems as data delivered by GeoRSS.

<Placemark>

<name>38681</name>

<description>Sample Date: 2010-07-02 18:00:00.0 Lat: 30.247965 Lon: -87.690424 SST: 35.8C</description>

<styleUrl>#msn_circle</styleUrl>

<Point>

<coordinates>-87.690424,30.247965,0</coordinates>

</Point>

</Placemark>
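
A quick sketch of what a consumer of that Placemark ends up writing. Both helpers are hypothetical, and the SST pattern assumes the exact `SST: 35.8C` wording shown above. The position is structured (though in longitude, latitude, altitude order, unlike the GeoRSS examples), while the temperature has to be scraped out of free text.

```python
import re


def parse_kml_coordinates(text):
    """KML stores lon,lat[,alt]; return (lat, lon) to match the GeoRSS order."""
    lon, lat, *_alt = (float(part) for part in text.strip().split(","))
    return lat, lon


def parse_sst(description):
    """Pull the sea surface temperature in degrees C out of free text, if present."""
    match = re.search(r"SST:\s*(-?\d+(?:\.\d+)?)C", description)
    return float(match.group(1)) if match else None


description = (
    "Sample Date: 2010-07-02 18:00:00.0 Lat: 30.247965 Lon: -87.690424 SST: 35.8C"
)
position = parse_kml_coordinates("-87.690424,30.247965,0")
sst = parse_sst(description)
print(position, sst)
```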

It's too bad that in Google Earth, you can't put a GeoRSS in a Network Link.

In case you are curious, Wikipedia has an entry on SLDMBs: Self-Locating Datum Marker Buoy